By Illumi Huang, Senior Industry Analyst, EE Times

Over the past several years, event-based vision sensors (EVS) have attracted sustained attention across the semiconductor and AI industries. Unlike conventional CMOS image sensors (CIS), these bio-inspired devices detect only changes in a scene rather than continuously sampling static imagery. Each pixel operates independently, triggering events when illumination changes exceed a threshold. This architecture enables continuous temporal perception, ultra-high effective frame rates reaching tens of thousands of frames per second, and exceptionally wide dynamic range.

These characteristics make EVS an appealing candidate for applications ranging from automotive safety and industrial automation to medical imaging and consumer electronics. Yet despite years of research activity and several commercial launches, adoption has remained uneven, particularly in consumer markets.

In a recent interview, AlpsenTek founder and CEO Jian Deng offered a broader system-level perspective on why EVS has struggled to scale, and how Hybrid Vision Sensors (HVS) may provide a more practical path forward.

“The AI era demands multi-dimensional, multi-modal understanding of external worlds,” Deng explained. “Take intelligent driving as an example. A robust vision system ideally needs multiple dimensions of information: the two-dimensional image plane; depth; color or spectral data; and, critically, time and motion. These five dimensions together form the foundation of modern machine vision.”

Traditional image sensors supply spatial detail. Time-of-flight and other ranging sensors add depth. Spectral sensors extend color information. Time and motion can be extracted from video streams—but at a significant cost. Conventional video relies on fixed frame rates, produces large volumes of redundant data, and requires substantial computation to extract meaningful motion cues.

“Event-based vision works differently,” Deng said. “Inspired by the human retina, it encodes only changes as sparse event signals. It complements frame-based sensors by capturing motion information directly, efficiently, and consecutively.”

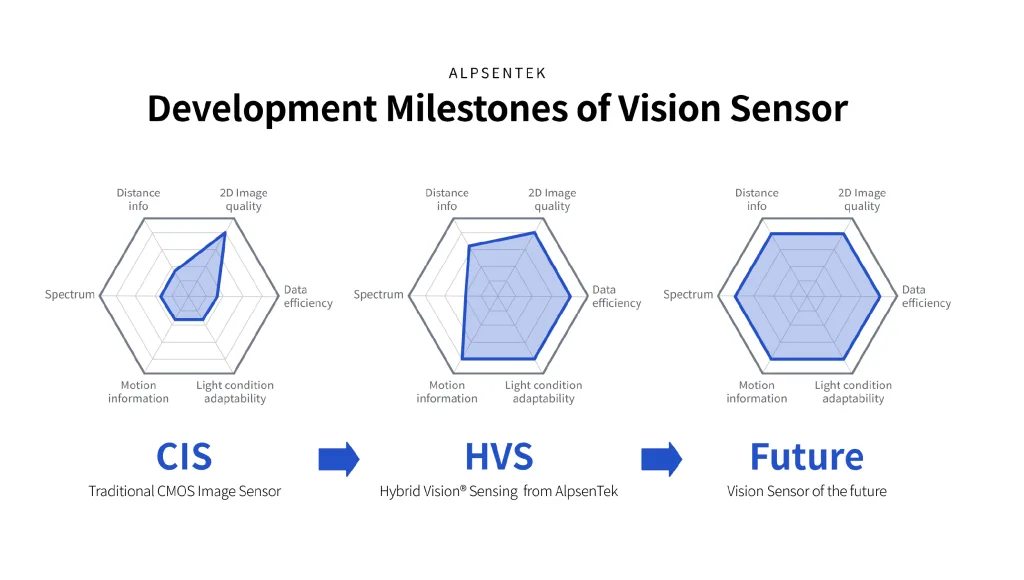

Figure 1: Evolution of vision sensors from traditional 2D CMOS image sensors (CIS) to Hybrid Vision Sensors (HVS), and toward future multi-dimensional vision sensors with improved motion awareness, data efficiency, and environmental adaptability.

Since its founding in 2019, AlpsenTek has focused on integrating these two paradigms into a single device. Its Hybrid Vision Sensor fuses event-based and frame-based sensing at the pixel level. Over time, the company plans to further integrate additional modalities, including distance measurement and spectral sensing, to create what Deng describes as a “full-stack” vision sensor for next-generation AI systems.

Against this backdrop, the interview explored five pivotal questions that define the commercial and technical trajectory of HVS.

1. What Exactly Is the Role of HVS (Hybrid Vision Sensor)?

At its core, an HVS integrates conventional imaging with event-based vision within a single sensor. The principles of EVS have been widely covered, and its advantages are well recognized: low data output, fast response, and high dynamic range. The defining characteristic of HVS is its positioning: it is first and foremost an image sensor, not a pure event sensor.

According to AlpsenTek, HVS implements proprietary pixel-level fusion by integrating image-sampling circuits and event-detection circuits within the same pixel array. Using in-pixel sensing and processing architectures—such as iampCOMB, GESP, and IN-PULSE DiADC—along with time-interleaved readout technologies like TILTech and PixMUX, HVS supports three operating modes: conventional image mode, event mode, and hybrid mode.

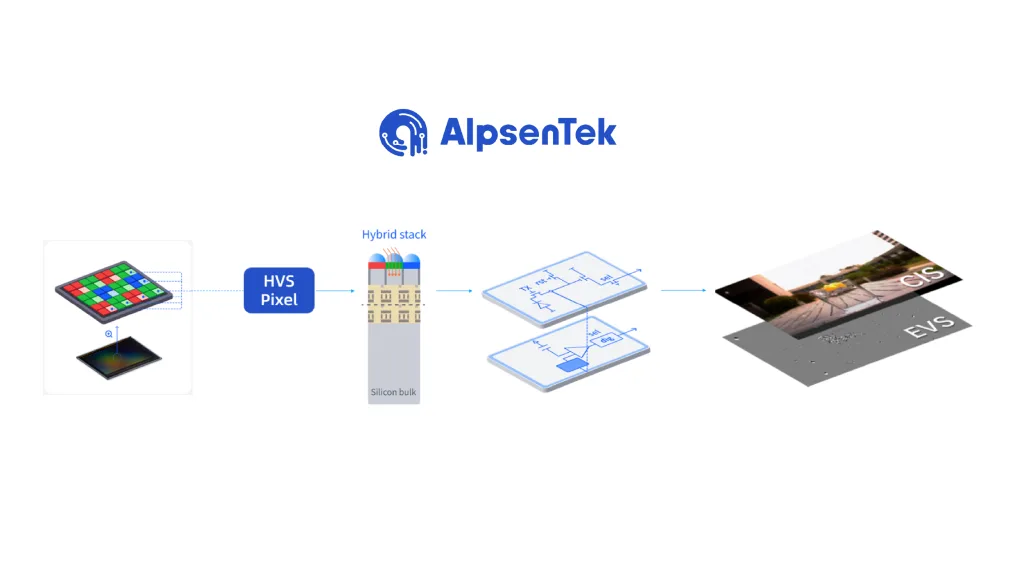

Figure 2: Hybrid Vision Technology integrates APS and EVS across the full stack—from sensor architecture and pixel design to data processing and application-level deployment.

While Deng did not disclose detailed pixel architectures, he emphasized that HVS should be understood as an evolutionary step for CIS rather than a parallel technology. In many applications, it functions as the primary imaging sensor rather than a supplementary device.

This distinction is important when comparing HVS with pure EVS sensors. “Pure event sensors can exceed 10,000 frames per second,” Deng noted. “They are well suited for ultra-high speed scenarios, where cost sensitivity is lower.” HVS, by contrast, balances multiple metrics: frame rate, dynamic range, pixel size, power consumption, data volume, and cost.

In contrast to pure event-based sensors, HVS is capable of delivering high-quality image data. Through ongoing optimization, AlpsenTek’s HVS sensors can easily achieve an equivalent frame rate of 1,000 fps and a dynamic range of 120 dB, while maintaining significantly lower cost than pure event sensors. This balance makes it suitable for high-volume markets.

Deng also highlighted a broader industry trend toward sensor integration. In smartphones, for example, ToF and spectral sensors already support imaging pipelines. Over time, these modalities are increasingly being absorbed directly into image sensors.

“Our roadmap follows this direction,” Deng said. “Event-based sensing will eventually be integrated into the main or ultra-wide camera. It becomes part of the sensor, not a separate component.”

Figure 3: An HVS hybrid sensor designed for smartphones enables differentiated imaging performance, improved authenticity, and more computationally efficient on-device AI and edge sensing.

From AlpsenTek’s perspective, HVS addresses a fundamental gap in how vision systems register data. “Authentic motion information is essential,” Deng said. “There is no other sensing approach that captures motion as efficiently as EVS does. But motion data must ultimately be mapped or registered with spatial image data within temporal-spatial coordinates. That is where HVS has a natural advantage.”

2. What Can HVS Do? Application Scenarios

In principle, any application suited to event cameras can also benefit from HVS. In practice, the ability to output standard image data significantly broadens the range of viable use cases.

Automotive systems illustrate this advantage. Event-based sensing offers high dynamic range, fast response, low latency, and low data redundancy—attributes well suited to environments with rapidly changing lighting. Deng described event sensing as enabling “pre-emptive assessment.”

“Traditional image sensors deliver rich visual detail,” he said. “But exposure takes time, and extracting critical information from that detail also takes time. This lowers system response speed.”

Figure 4: In rapidly changing lighting conditions, EVS offers superior dynamic range and reduced data volume, enabling faster obstacle detection, shorter braking response times, and improved active safety.

Event-based sensing, by contrast, can quickly provide coarse input data for vehicles, such as the presence of fast-moving objects. These cues then trigger more detailed semantic analysis through sophisticated algorithms, reducing response time, improving safety, and lowering power consumption.
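The two-stage flow described above, in which cheap event cues decide when to run expensive analysis, can be illustrated with a minimal Python sketch. It is not AlpsenTek's implementation: the function name, the 5 ms window, and the 200-event threshold are all hypothetical values chosen for illustration.

```python
def should_run_detailed_analysis(event_timestamps_us, now_us,
                                 window_us=5_000, min_events=200):
    """Return True when recent event activity justifies heavier analysis.

    event_timestamps_us -- timestamps (microseconds) of recent events
    now_us              -- current time in microseconds
    window_us           -- look-back window (assumed value)
    min_events          -- activity threshold (assumed value)
    """
    recent = sum(1 for t in event_timestamps_us
                 if now_us - window_us <= t <= now_us)
    return recent >= min_events


now = 1_000_000
# Quiet scene: one event every 400 us, so only a handful fall in the window.
quiet_scene = [now - 400 * i for i in range(50)]
# Busy scene: 500 events packed into the last 500 us.
busy_scene = [now - i for i in range(500)]

quiet_decision = should_run_detailed_analysis(quiet_scene, now)
busy_decision = should_run_detailed_analysis(busy_scene, now)
```

In this sketch the quiet scene leaves the heavier pipeline idle, while the burst of activity wakes it up, which is the mechanism behind the response-time and power savings described above.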

In smartphones, HVS supports motion capture with high temporal resolution, even under low-light conditions. Motion information can significantly improve image enhancement tasks such as deblurring, HDR imaging, and video frame interpolation. For video capture and AI-based post-processing (AI editing), it provides a sparse, continuous event-based reference that maintains temporal consistency across the gaps between image frames.

According to Deng, AlpsenTek has also developed compact HVS devices designed to align more directly with smartphone main cameras, reducing the complexity of registering heterogeneous data from separate sensors. This approach emerged through close collaboration with smartphone OEMs and aims to integrate HVS directly into phone camera modules.

From a system perspective, HVS contributes across the imaging pipeline. Before capture, it assists with metering and focus. During exposure, it continuously records motion and changes. After capture, it improves frame registration and supports advanced processing such as deblurring, HDR, and frame interpolation.

In 2023, AlpsenTek introduced its first mass-produced HVS, the APX003 (previously known as ALPIX-Eiger). Current deployments fall into two main categories. One targets rapid-sensing applications such as robots and action cameras. The other positions HVS as a low-power sensor for edge AI that distills data and extracts features, supporting XR devices, smartphone front cameras, smart-home devices, and traffic surveillance.

Deng repeatedly emphasized that HVS is fundamentally an AI sensor. “Training AI systems with high-frame-rate video is extremely inefficient,” he said. “Most of the data is not informative, yet it drives enormous storage and computation costs.”

Event-based sensing performs data distillation directly at the sensor, filtering out non-contributory information. As a result, it enables more efficient physical AI and embodied systems that interact with the real world in real time.

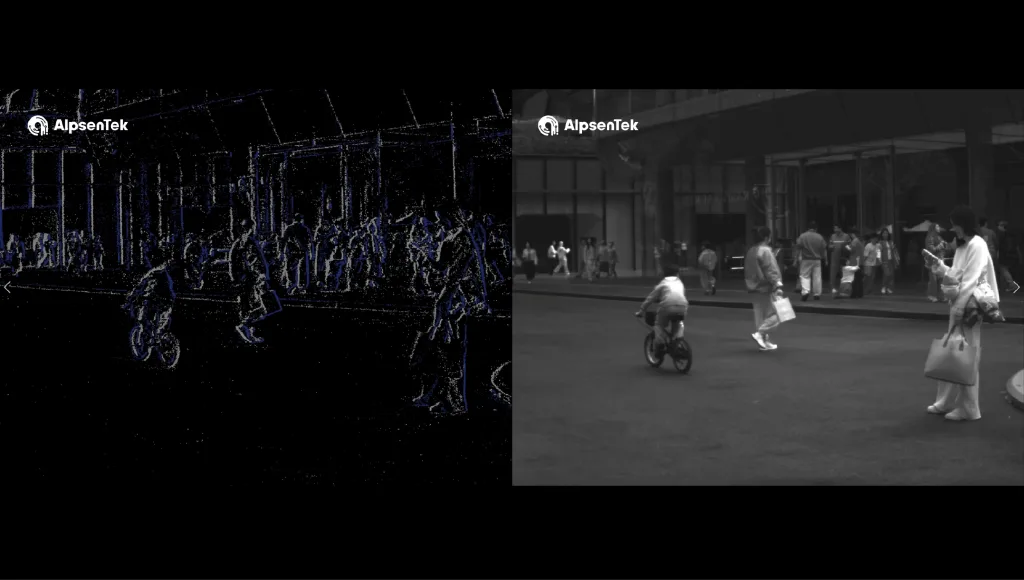

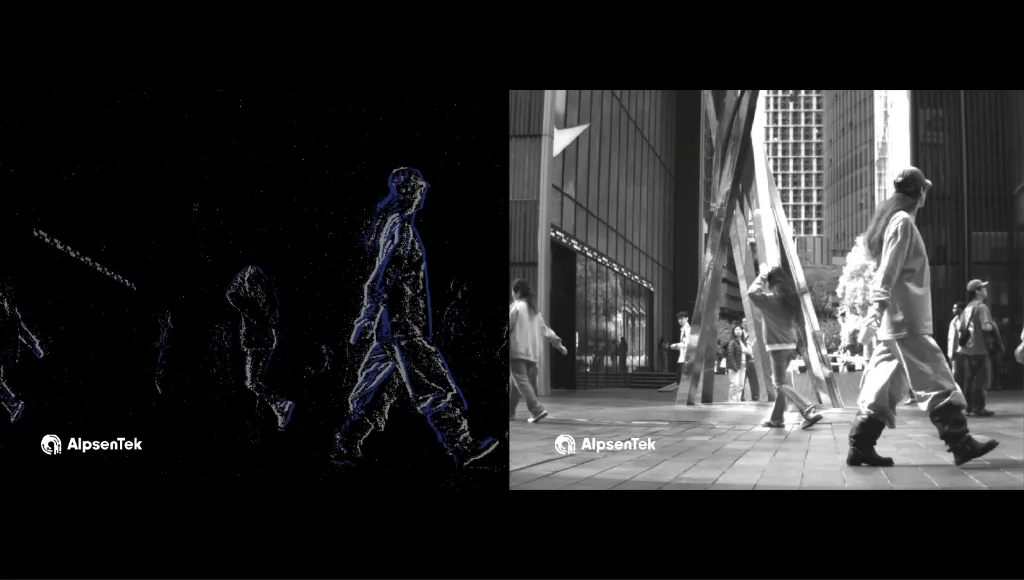

Figure 5: Comparison of 1.3-MP EVS output (left) and 1.3-MP APS output (right) from the APX014 (ALPIX-Pizol) hybrid sensor, featuring a global shutter and monochrome output

3. Why Pursue Pixel-Level Fusion?

Given the availability of multi-sensor systems, a natural question arises: why pursue tightly integrated, pixel-level fusion rather than using separate sensors?

The challenge lies in the fundamental mismatch between image and event sensor architectures. Event sensors effectively compute temporal derivatives of photoelectric signals, often operating on logarithmic light intensity and triggering event signals based on threshold changes. Image sensors, by contrast, integrate light over time to form frames.
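The event-generation principle described here can be made concrete with a short Python sketch of the commonly used idealized single-pixel model. It is a simplification for illustration, not AlpsenTek's pixel design: the function name, the contrast threshold of 0.2, and the small `eps` offset are assumptions.

```python
import math


def generate_events(samples, threshold=0.2, eps=1e-6):
    """Emit (sample_index, polarity) events for one idealized pixel.

    samples   -- non-negative intensity readings over time
    threshold -- contrast threshold in log-intensity units (assumed value)
    eps       -- small offset so log(0) is never evaluated (assumed value)
    """
    events = []
    # Log intensity at the time of the last event (the pixel's reference).
    reference = math.log(samples[0] + eps)
    for i, value in enumerate(samples[1:], start=1):
        delta = math.log(value + eps) - reference
        # A large change may cross the threshold more than once.
        while abs(delta) >= threshold:
            polarity = 1 if delta > 0 else -1
            events.append((i, polarity))
            reference += polarity * threshold
            delta = math.log(value + eps) - reference
    return events


static_pixel = generate_events([100, 100, 100, 100])
brightening_pixel = generate_events([100, 100, 150, 150])
darkening_pixel = generate_events([100, 80])
```

A static pixel emits nothing, while a brightening or darkening pixel emits ON or OFF events only at the moment of change. A frame-based pixel would instead report a value in every frame regardless of whether anything changed, which is the mismatch the two circuit types must reconcile.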

Deng acknowledged this difficulty but stressed that HVS is designed as an image sensor with integrated event capability, not a mere juxtaposition of two distinct sensors. This design choice allows its pixel arrays to be comparable to those of CIS. AlpsenTek has demonstrated a roadmap that scales down to smaller pixel sizes, with a 1.89 µm HVS already in mass production. By comparison, some commercially available pure event sensors, such as the IMX636, use pixels larger than 4 µm.

Although AlpsenTek’s roadmap includes high-resolution HVS devices, Deng cautioned that there is no practical value in making every pixel “hybrid.” Motion information supplied by EVS is typically sparse and coarse, and excessive event pixel counts increase data volume and cost without proportional benefit.

As a result, AlpsenTek specifies APS and EVS resolutions separately, allowing event resolution to be tailored to application needs.

The core value of pixel-level fusion, Deng concluded, is native spatiotemporal alignment. When image and event data originate from the same pixel, with carefully designed readout circuits, spatial and temporal registration is inherent. This eliminates the need for external synchronization and computationally expensive post-alignment.

Dual-sensor architectures face intrinsic challenges in aligning heterogeneous data streams that operate at different sampling rates. These challenges have contributed to the limited success of earlier attempts to introduce pure event sensors into consumer devices.

“Supporting event data also requires changes in algorithm frameworks and data structures,” Deng noted. “The development burden is huge, and the return on investment is often disproportionate.”

4. When Will HVS Reach Mass-Market Volume?

The difficulties faced by earlier event-sensor suppliers offer important lessons. Standalone event sensors struggled with integration, data handling, and unclear product positioning. Many suppliers also lacked deep engagement with smartphone OEMs.

Deng acknowledged the role of early pioneers in building awareness and ecosystem foundations. He compared the trajectory to ToF sensors, which required years to move from emergence to widespread smartphone adoption.

Figure 6: Pedestrian motion captured by the APX014 (ALPIX-Pizol) hybrid sensor, showing 1.3-MP EVS data (left) alongside the corresponding 1.3-MP APS image (right), with global shutter and monochrome output.

In Deng’s view, the timing is now more favorable. On the demand side, AI applications increasingly require efficient motion perception and low power consumption. On the supply side, HVS technology has matured to deliver practical ROI.

By distilling data at the sensor level, HVS aligns well with the needs of compact edge devices. AlpsenTek’s sensors are already shipping in wearables, AIoT, smart transportation, and security applications.

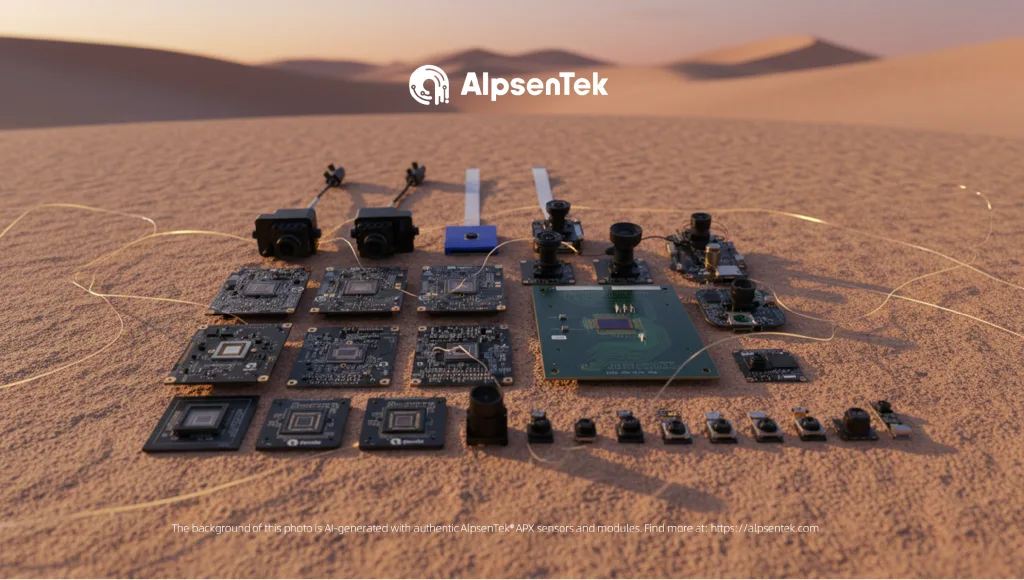

Figure 7: A portfolio of AlpsenTek APX-series hybrid vision sensors and camera modules, illustrating the company’s range of HVS products for diverse applications.

“We expect 2026 and 2027 to be a period of rapid growth,” Deng said. “AlpsenTek is currently the only company that brought this type of sensor into mass production, and we have already completed extensive algorithm and system development.”

Importantly, HVS manufacturing relies on standard image-sensor process flows, rather than specialized fabrication steps. As a result, Deng does not anticipate production capacity becoming a bottleneck as demand increases.

5. How Mature Are the Technology and Development Ecosystems?

In April 2025, AlpsenTek completed a Series B financing round, with investors including Wisdom Internet Industry Fund, PuXin Capital, CRRC Times Investment, and others. Prior to this, AlpsenTek had secured four rounds of funding backed by leading industry players and funds, including Lenovo, OPPO, Goertek, ArcSoft, and Sunny Optical, as well as Casstar, Zero2IPO, Glory Ventures, and others (with some participating across multiple rounds). This sustained investment reflects long-term confidence in HVS technology.

From its founding in 2019 to the launch of its first HVS products in 2023, the company addressed numerous challenges, including pixel integration, interference mitigation, process optimization, and overcoming mechanical constraints in camera modules.

Beyond hardware, Deng emphasized the importance of software and systems. A significant portion of AlpsenTek’s team focuses on hybrid-oriented algorithms and system development. The company delivers not only sensors, but also development boards, reference designs, SDKs, and datasets.

Algorithm development spans three layers: hardware-optimized core operators for 2-bit event data; driver-level models and SDKs for tasks such as motion and human detection and pose and gesture recognition; and application-layer frameworks developed by customers. AlpsenTek also provides event datasets tailored to specific scenarios to lower development barriers.
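To give a sense of why 2-bit event data lends itself to compact, hardware-friendly operators, the Python sketch below packs four event codes into each byte. The encoding is purely hypothetical (0 for no event, 1 for ON, 2 for OFF, 3 unused) and the function names are invented for illustration. The article does not describe AlpsenTek's actual data format.

```python
def pack_events(codes):
    """Pack 2-bit event codes (values 0-3) into bytes, four codes per byte.

    Hypothetical encoding: 0 = no event, 1 = ON, 2 = OFF, 3 = unused.
    """
    packed = bytearray((len(codes) + 3) // 4)
    for i, code in enumerate(codes):
        if not 0 <= code <= 3:
            raise ValueError("event code must fit in 2 bits")
        # Code i occupies bits 2*(i % 4) and 2*(i % 4) + 1 of byte i // 4.
        packed[i // 4] |= code << (2 * (i % 4))
    return bytes(packed)


def unpack_events(packed, count):
    """Recover the first `count` 2-bit codes from a packed byte string."""
    return [(packed[i // 4] >> (2 * (i % 4))) & 0b11 for i in range(count)]


row = [0, 1, 2, 0, 0, 0, 1, 1]       # one row of eight event pixels
packed_row = pack_events(row)         # eight pixels fit in two bytes
restored_row = unpack_events(packed_row, len(row))
```

Under this assumed layout, eight event pixels occupy two bytes, a fraction of what a row of conventional multi-bit image pixels requires.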

The company collaborates with top-tier academic institutions globally and has sponsored leading academic conferences on computer vision and related fields. These partnerships support frontier research in multi-modal sensing and perception, as well as ecosystem development.

“The HVS ecosystem is a long-term effort,” Deng concluded. “As more participants adopt the technology, applications mature, iteration accelerates, and the ecosystem enters a positive cycle.”

Link to this article on EE Times global:

https://www.eetimes.com/reshaping-the-eye-of-ai-how-will-hvs-transform-ai-machine-vision-in-2026